Themes I’m exploring this week 🤔

The implications of ‘Democratic AI’

It is a good time to be an aspiring AI entrepreneur. We are rapidly shifting towards an AI democracy. By this, I mean a world in which companies can incorporate state-of-the-art AI functionality into their products with a decreasing need for specialist ML expertise.

Advances in deep transfer learning and a maturing AIaaS ecosystem have made AI more accessible than ever before.

AI has always been somewhat democratic. Open-source philosophy is central to the field. Architectures and pre-trained models are mostly public — having been built in academic settings. The difference between then and now is the question of who is allowed to play.

I would describe the past decade as one of Athenian democracy. In Athens, only adult male citizens (data scientists and ML engineers) who had completed their military training as ephebes (STEM postgraduate degrees) had the right to vote (build ML models). Building AI products required access to vast amounts of data, as well as an understanding of how to properly clean and label it. It also required a grasp of the intricacies of frameworks like TensorFlow and PyTorch to develop and train custom models. While considerably more accessible than the feudalism that preceded it, this period was by no means democratic in the way we define the word today.

However, we are fast approaching a true democracy.

Entrepreneurs can now build and deploy applied AI products for well under $50k, and in months rather than years. For example, for $100-400/month, startups can integrate the astonishingly powerful GPT-3 language model into their products using OpenAI’s API. What’s more, this API is a general-purpose “text in, text out” interface: given any text prompt, it returns a text completion that attempts to match the pattern it was given. In this way, it can be “programmed” simply by showing it a few examples of what you’d like it to do. There is no need for feature engineering, model selection, architecture best practices or hyperparameter optimisation. In fact, one GPT-3 startup built and launched its product with essentially no code at all (using Bubble.io).
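To make the idea of “programming” a model with a few examples more concrete, here is a minimal sketch of how a few-shot prompt might be assembled as plain text before being sent to a “text in, text out” completion API. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are hypothetical choices for illustration, and the actual API request is deliberately left out.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a hypothetical few-shot prompt: a task description, a handful of
    worked examples, then the new input with an empty output slot for the model."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")  # blank line separates examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the completion model is expected to continue from here
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each product review as Positive or Negative.",
        [
            ("Arrived quickly and works perfectly.", "Positive"),
            ("Broke after two days.", "Negative"),
        ],
        "Great value for the price.",
    )
    print(prompt)
```

In this framing, the model’s continuation of the final `Output:` line would be the answer, and changing the task is, in principle, just a matter of swapping the examples rather than writing training code.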

Such democratisation of AI is not unique to OpenAI. Using Google’s AutoML, companies can train a custom image classification model, fine-tuned to their specific use case, for less than $200. Training is done through a drag-and-drop interface, and no model code is required.

Amazon, IBM and Microsoft all have comparable ‘Auto AI’ APIs on the market, covering computer vision, speech, translation and text analytics. These platforms are low-code and are based on few-shot learning. Any developer with a basic understanding of consuming REST APIs can use these services to build powerful, custom AI models.

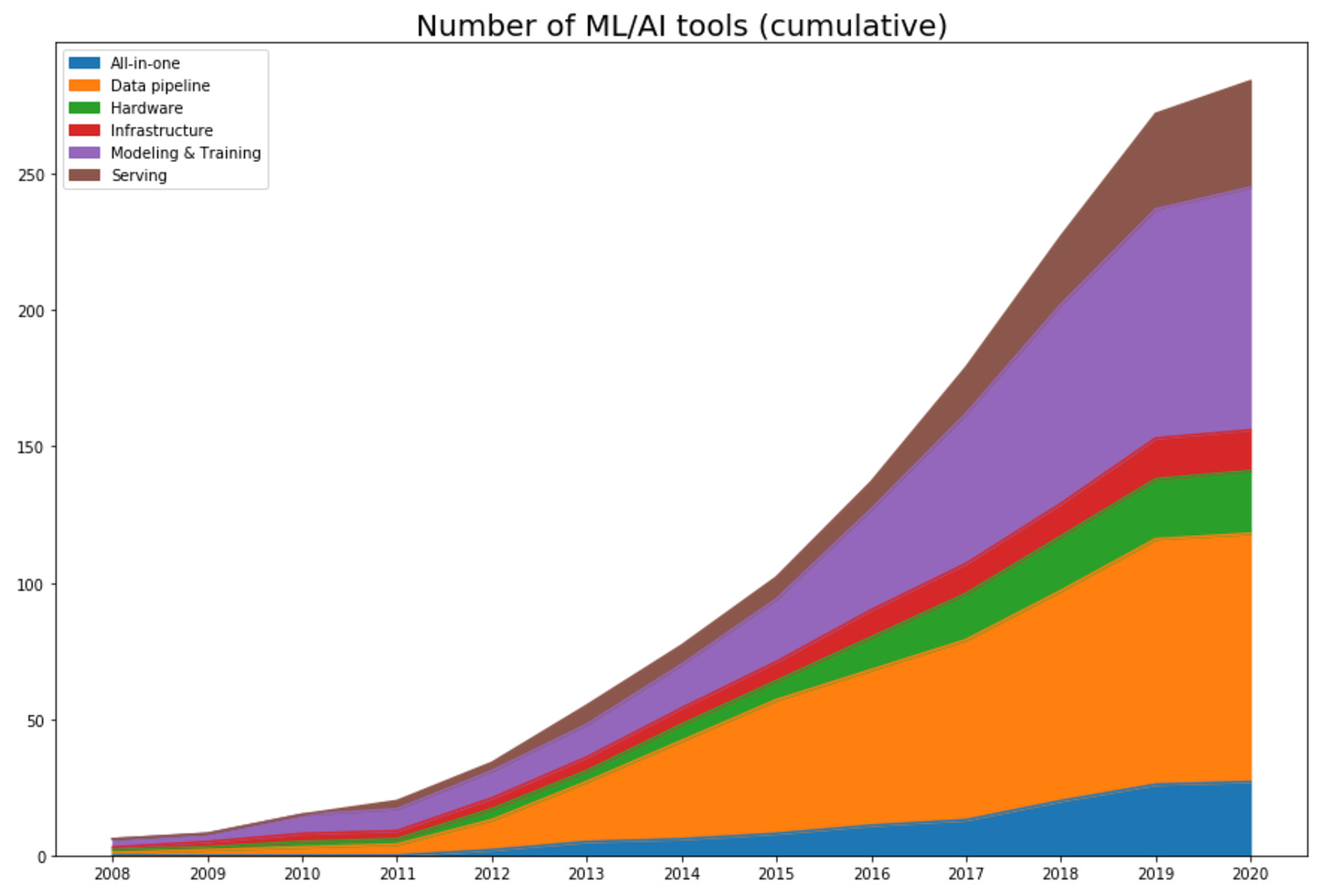

Aside from pure Auto AI, there has been a marked rise in the number of MLOps tools over the past few years, covering the full spectrum of operations.

Source: Chip Huyen

While most of the ‘all-in-one’ services have been around for ~3 years, the past 12 months have seen a considerable increase in the breadth and depth of their capabilities.

This has dramatically lowered the barriers to entry for AI startups.

What are the implications of this?

TLDR

{↑ Auto AI APIs = ↓ startup costs + ↓ barriers to entry ⇒

↑ innovation + ↑ competition};

{↑ innovation ⇒ ↑ Applied AI exits ⇒ ↑ TAM for Auto AI APIs ⇒ ↑ innovation};

// in an infinite loop

{↑ competition ⇒ ↑ difficulty of achieving venture scale (all things equal)};

The clear implication of this greater accessibility is that we are in the midst of a Cambrian explosion of Applied AI innovation. This is a welcome development for the sector. I believe that it will unlock a growth loop, in which improvements in ‘Auto AI APIs’ lead to more innovation, which results in more successful Applied AI exits. This in turn drives growth in demand for Auto AI APIs, which leads to increased investment in the breadth and depth of their capabilities. This then leads to further innovation, and so on.

For startups, however, lower barriers to entry imply a marked increase in competition. Previously, AI startups may have had a 12-18 month head start, driven by their proprietary models and datasets, as well as a shortage of ML talent. However, these competitive advantages are narrowing as AI becomes increasingly accessible.

This necessitates a fundamental change in the strategic playbook.

Below are 3 factors that I believe should be requirements for VCs and founders looking to succeed in this new paradigm.

The startup playbook in a world of democratic AI

Caveat:

I believe that these principles apply to the 80% of startups that are using AI to address non-‘mission-critical’ problems. These make up the vast majority of AI startups in the MediaTech sector, including companies like Gong, Trax, Otter and Drift.

The 20% that are addressing ‘deep-tech’ applications (e.g. in defence, life sciences, materials) will be more sheltered from these new entrants, and will play by very different rules.

Factor 1: Defensibility should be the number one priority

How does one compete in an increasingly commoditised market, with limited early mover and scale advantages? Build moats from day one.

Network effects and switching costs are the two best moats an AI startup can use to build defensibility in the absence of any major technological differentiation.

Network effects

From day one, startups should create an ecosystem in which network effects can thrive. The most effective way to do so is to adopt a marketplace or platform business model. Combined with being an early mover, this can lead to a sustainable competitive advantage. Note: companies should not rely purely on ‘data network effects’; this is misguided, since the marginal value of additional data tends to diminish as a dataset grows.

Startups that have done this well: Lilt offers AI translation services for enterprises. Unlike Google Translate, it is a two-sided marketplace, with freelance translators on one side and enterprises needing accurate translation services on the other. It uses AI to considerably speed up the translators’ work. This ‘augmented’ model enables it to benefit from network effects.

Switching costs

By making it inconvenient for users to migrate to a competing product, startups can create stickiness, even in the absence of technological differentiation. This enables them to better retain users in the face of tough competition. The most relevant switching costs for Applied AI startups are:

[Switching cost 1: Data traps]

Data traps emerge when customers create or purchase content that is exclusively hosted on the product. Leaving one product for another forces customers to let go of the content or activity, since this cannot be migrated to another product.

Startups that have done this well: Suki is a voice-based AI digital assistant for doctors. Doctors have a major disincentive to leave the product, since doing so would force them to abandon their entire archive of patient notes.

[Switching cost 2: Learning costs]

The emotional value of users mastering a product is highly underappreciated. Frictionless onboarding is essential, and has been a huge growth driver for the likes of Zoom and TikTok. However, once users are onboarded, there is value in creating a continuously steep learning curve and emotionally rewarding users for mastering a product’s features. The more time users invest in learning a product, the less incentive they have to abandon it.

Startups that have done this well: DataRobot democratises data science by automating the end-to-end journey from data to value. Although highly accessible, mastering the product requires a considerable number of learning hours. Users are referred to as ‘Heroes’ once they have mastered the platform.

[Switching cost 3: Embedding]

Integrate with everything! Embedding works when products are integrated deeply into a customer’s existing tech stack. The more integrations, the more likely it is that churn will inconvenience users.

Startups that have done this well: People.ai integrates into CRM systems like Salesforce and automatically inputs relevant data from email, calendars, Slack chats and more.

Factor 2: Optimise your market positioning

If you can, do things that others can’t. If your unique advantage is specialist ML expertise, the best way to ward off competition is to build models that are better than anything that can be achieved with off-the-shelf solutions. Most startups can build a model that achieves 80–90% accuracy. However, it becomes exponentially harder to increase accuracy beyond this. Achieving best-in-class accuracy where it is required can be a solid differentiator for startups.

The best way to achieve this is through a superior data strategy. This combines (i) access to proprietary data; (ii) a unique process for combining and enriching data from publicly available sources; (iii) a process for gathering data in a scalable and cost-effective way; and (iv) a process for creating reliable synthetic data (e.g. using GANs).

However, it is crucial to validate these accuracy requirements with users before assuming that this is what they want. For many use cases, approximation will be sufficient. The worst thing to do is to spend years in development chasing the final 10%, only to find out that most users are satisfied with 90%. The best thing to do is to get products out fast, speak to users, and iterate where necessary.

Startups that have done this well: Affectiva is seeking to improve emotional analysis based on facial expressions and voice for demanding use cases like the automotive industry. This cannot be achieved by heuristic coding or a simple rules-based approach.

Factor 3: Create a UX advantage

A byproduct of the shift to the ‘API economy’ is an increasing necessity for startups to have best-in-class product, UX and marketing. Suppliers like AWS, Stripe, Auth0, Algolia and Segment handle what was once considered core technology.

I expect the number of third-party suppliers to continue to increase over time (mirroring traditional supply chains like automotive and manufacturing). This will place more emphasis on the less functional aspects of a startup, most notably the ‘sense of joy’ that customers feel when they use a product.

Founders should strongly consider roles like Head of UX as early hires - despite these roles being traditionally associated with later stage companies.

News from this week 🗞

Gaming 🎮

Game-based learning platform Kahoot! could be the first European IPO of the new year. With the boom in digital learning and Zoom-based quizzes among family and friends, Kahoot! has amassed 24 million active users and is valued at $5.7 billion. Link

Educational gaming platform Prodigy raises a $125m Series B. Prodigy is known for its interactive games that teach kids maths. It is one of the fastest growing EdTech companies in North America and has >100m registered users. Link

Roblox has acquired Loom.ai for an undisclosed price to enable its users to create realistic 3D avatars. In addition, Roblox said that the company is postponing its initial public offering to 2021. Loom.ai surfaced in 2016, with the goal of automating the creation of 3D avatars from a single selfie. Today, Roblox said Loom.ai enables real-time facial animation tech using deep learning neural networks, computer vision, and visual effects. Link

Indian online gaming platform Zupee raised $10 million. Zupee has now raised a total of $19 million, including the $8 million funding in April 2020. Founded in 2018, Zupee allows users to play live quiz tournaments across a range of topics including movies, sports, spellings, maths, and general interest topics on their smartphones. Link

Esports sponsorship and audience analytics firm Cavea has raised €1m. Cavea’s AI-based sponsorship and advertising platform provides organisations, tournaments, brands, agencies, content creators and streamers with solutions to help manage, collect, track and value marketing content data for all available channels. Link

Twitch and Facebook Gaming set new monthly viewership records in December, at 1.7 billion hours and 388 million hours watched, respectively. Link

Audio 🎧

Descript has raised $30M in a Series B round to build the next generation of video and audio editing tools. Its tools let creators edit audio and video files by editing text, using, for example, natural language processing to link the media content to a text transcript. Link

AR/VR 🕶

Steam reports 1.7 million new VR users in 2020 and a 71% increase in VR game revenue. Link

Video 📹

Blabla, a Chinese startup that aims to teach English through short, snappy videos, has raised $1.54 million in a seed round led by Amino Capital, Starling Ventures, Y Combinator, and Wayra X. Link

Roku has agreed to acquire the rights to content from Quibi, a deal that will make the defunct short-form streaming service’s shows available on the biggest streaming-media player in the U.S. Roku paid less than $100 million to acquire the shows, says the WSJ. This is significantly less than the $1.7bn Quibi spent on its product and content before launching. Link

Other 🤷‍♂️

Startup investing in the U.S. reached a record high of $130 billion in 2020, according to a new MoneyTree report from PwC/CB Insights. That's up 14% from 2019, according to the report, though the surge was driven by fewer companies raising much larger rounds. Link

India’s biggest online-education startup, Byju’s, is acquiring brick-and-mortar test-prep leader Aakash Educational Services for $1 billion, according to Bloomberg. The deal will be one of the largest edtech acquisitions in the world and is set to close in the next two or three months. Byju’s, based in Bangalore, is valued by its investors at $12 billion. Link

Apple and Google removed Parler from their app stores, saying it was not effectively moderating posts arguing for political violence in the USA. Amazon gave it 24 hours’ notice to get off AWS for the same reason, and Twilio (SMS authentication) cut it off too. Link

OpenAI’s new DALL-E model can create images from text prompts, however random: "a cube made of porcupine", or "a hedgehog wearing a red hat, yellow gloves, blue shirt, and green pants." Very cool. Link

The UK competition regulator (the CMA) is opening an investigation into Google's plan to phase out third-party cookies in Chrome. As the CMA acknowledges, this is a big regulatory conflict between privacy (which has its own regulator, the ICO) and competition. Google and Apple are using their dominance of the browser market to close down cookies, which is good for user privacy but bad for advertisers (which compete with Google), and good for other parts of their businesses (search advertising and the App Store, respectively). Link

Interesting data from this week 📈

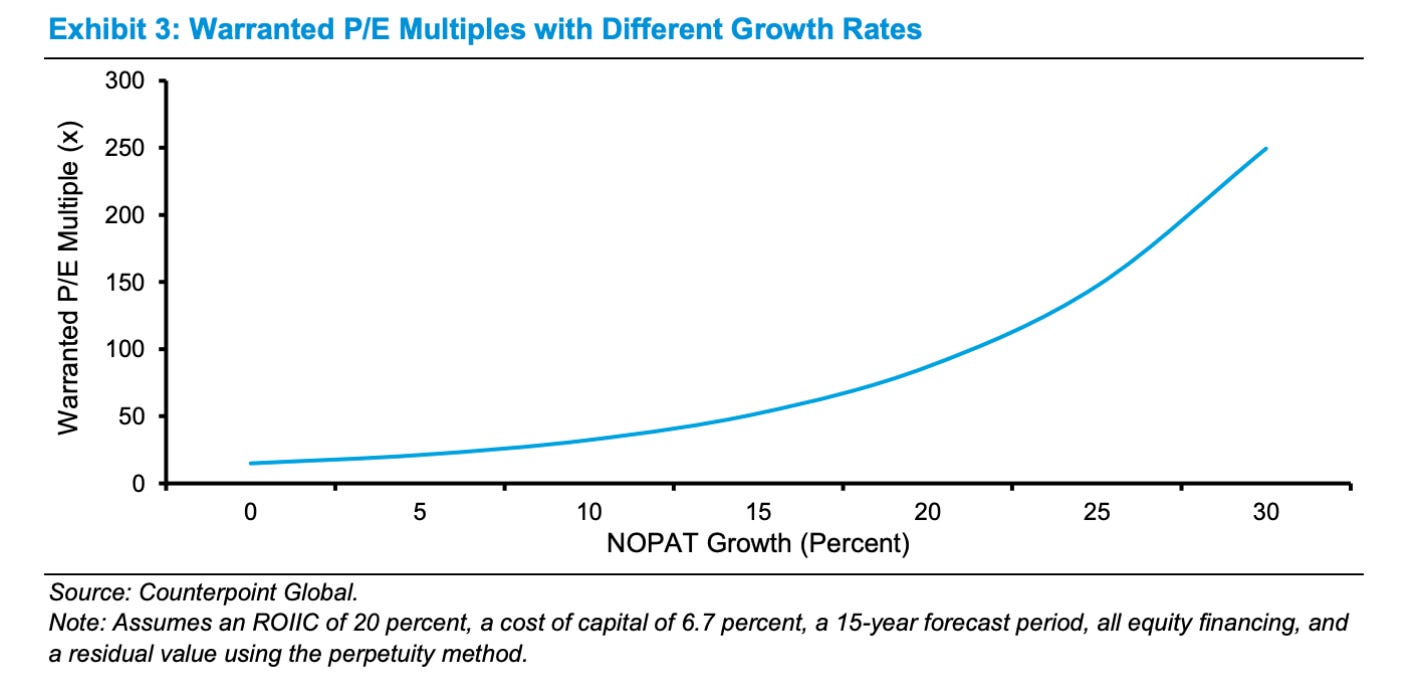

According to Michael Mauboussin’s recent paper, The Math of Value and Growth, a company growing faster should enjoy a multiple that grows geometrically with the growth rate, not linearly.

He included this chart, which shows the P/E multiple on the y-axis and the growth rate of NOPAT (net operating profit after tax) on the x-axis.

In other words, the multiple should not rise in a straight line as growth increases; it should curve upward, following a power law as a function of the growth rate.

Source: Tomasz Tunguz
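The upward-curving shape can be reproduced with a toy two-stage valuation. This is not Mauboussin’s exact model; it is a minimal sketch assuming a company whose NOPAT grows at a constant rate g for a fixed number of years, reinvests the fraction g / ROIC of NOPAT needed to fund that growth, and then stops creating value, with everything discounted at a cost of capital r. The function name `pe_multiple` and the parameter values (r = 8%, ROIC = 20%, 10 years) are arbitrary illustrative assumptions.

```python
def pe_multiple(g: float, r: float = 0.08, roic: float = 0.20, years: int = 10) -> float:
    """Forward P/E (value / next year's NOPAT) in a hypothetical two-stage model.

    Illustrative assumptions: NOPAT is 1.0 next year and grows at rate g for
    `years` years; growth requires reinvesting g / roic of NOPAT, so free cash
    flow = NOPAT * (1 - g / roic); after the forecast period growth creates no
    further value, so the terminal value is the next year's NOPAT capitalised at r.
    """
    value = 0.0
    nopat = 1.0  # NOPAT in year 1, so the resulting value is the forward P/E
    for t in range(1, years + 1):
        fcf = nopat * (1 - g / roic)   # cash left after funding growth
        value += fcf / (1 + r) ** t    # discount year-t cash flow
        nopat *= 1 + g                 # NOPAT for the following year
    # nopat now holds NOPAT for year years + 1; value the steady state at r
    value += (nopat / r) / (1 + r) ** years
    return value


if __name__ == "__main__":
    for g in (0.0, 0.05, 0.10, 0.15):
        print(f"{g:.0%} NOPAT growth -> P/E {pe_multiple(g):.1f}")
```

Under these assumptions each extra five points of growth adds more to the multiple than the previous five, which is the convexity the chart illustrates. The curve depends on ROIC exceeding r: with `roic=r`, this toy model returns 1 / r for any growth rate, because growth then neither creates nor destroys value.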

Thank you for reading ✌️